SCW Trust Agent: AI - Visibility and Governance for Your AI-Assisted SDLC

The widespread adoption of AI coding tools is transforming software development. With 78% of developers[1] now using AI to increase productivity, the speed of innovation has never been greater. But this rapid acceleration comes with a critical risk.

Studies reveal that as much as 50% of functionally correct, AI-generated code is insecure[2]. This isn’t a small bug; it’s a systemic challenge. It means every time a developer uses a tool like GitHub Copilot or ChatGPT, they could be unknowingly introducing new vulnerabilities into your codebase. The result is a dangerous mix of speed and security risks that most organizations are not equipped to manage.
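To make "functionally correct but insecure" concrete, here is a minimal sketch of the kind of code an assistant might plausibly suggest. It is an illustration only, not output from any particular tool: the `users` table, the function names, and the demo data are all hypothetical. Both lookups return the right row for ordinary input, but only the parameterized version holds up against a crafted username.

```python
import sqlite3


def find_user_insecure(conn, username):
    # Builds the SQL with string formatting. It works for normal input,
    # but attacker-controlled text becomes part of the query itself.
    query = f"SELECT id, name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized query: the driver passes the input strictly as data.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


def make_demo_db():
    # Hypothetical in-memory database used only for this illustration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn
```

With a normal name such as `alice`, the two functions behave identically, which is why the flaw is easy to miss in review. With an input like `x' OR '1'='1`, the insecure version returns every row in the table while the safe version returns nothing.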

The Challenge of “Shadow AI”

Without a way to manage AI coding tool usage, CISOs, AppSec leaders, and engineering leaders are exposed to new risks they can't see or measure. How can you answer crucial questions like:

- What percentage of our code is AI-generated?

- Which AI models are developers using?

- Do developers have the security proficiency to spot and fix flaws in the code the AI produces?

- What vulnerabilities are being generated by different models?

- How can we enforce a security policy for AI-assisted development?

The lack of visibility and governance creates a new layer of risk and uncertainty. It’s the very definition of “shadow IT,” but for your codebase.
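As a rough sketch of what answering the first two questions could look like once usage data exists, assume each commit comes with a record of how many added lines were attributed to an AI tool. The `CommitRecord` fields and both helper functions below are hypothetical and are not Trust Agent: AI's data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CommitRecord:
    repo: str
    author: str
    lines_added: int
    ai_lines_added: int      # lines attributed to an AI tool (assumed signal)
    ai_tool: Optional[str]   # e.g. "copilot"; None when no AI tool was detected


def ai_share_by_repo(commits):
    # Sum AI-attributed lines and total added lines per repository.
    totals = defaultdict(lambda: [0, 0])
    for c in commits:
        totals[c.repo][0] += c.ai_lines_added
        totals[c.repo][1] += c.lines_added
    # Fraction of added lines that were AI-generated, per repository.
    return {
        repo: (ai / total if total else 0.0)
        for repo, (ai, total) in totals.items()
    }


def tools_in_use(commits):
    # Distinct AI tools seen across all commits, sorted for stable output.
    return sorted({c.ai_tool for c in commits if c.ai_tool})
```

The hard part in practice is producing trustworthy attribution data in the first place; the arithmetic on top of it is simple.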

Our Solution – Trust Agent: AI

We believe you can have both speed and security. We're proud to launch Trust Agent: AI, a powerful new capability of our Trust Agent product that provides the deep observability and control you need to confidently embrace AI in your software development lifecycle. Using a unique combination of signals, Trust Agent: AI provides:

- Visibility: See which developers are using which AI coding tools and LLMs, and on what codebases. No more "shadow AI."

- Risk Metrics: Connect AI-generated code to a developer's skill level and to the vulnerabilities it introduces, so you can understand the true risk at the commit level.

- Governance: Automate policy enforcement to ensure AI-enabled developers meet secure coding standards.
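One way to picture the risk metrics and governance ideas above is a commit-level rule that flags AI-assisted commits from developers whose security skill score falls below a threshold. This is a minimal sketch under stated assumptions: the `Commit` type, the `skill_scores` mapping, the `min_score` default of 70, and the rule itself are hypothetical, and do not describe how Trust Agent: AI actually evaluates policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    sha: str
    author: str
    ai_generated: bool   # assumed flag: the commit contains AI-generated code


def evaluate_policy(commits, skill_scores, min_score=70):
    # Return (sha, author, score) for each AI-assisted commit whose author
    # is below the required score. Unknown authors are treated as score 0.
    violations = []
    for c in commits:
        score = skill_scores.get(c.author, 0)
        if c.ai_generated and score < min_score:
            violations.append((c.sha, c.author, score))
    return violations
```

A result like this could feed a pull request check or a report. Treating unknown authors as score 0 is a deliberately conservative choice for the sketch; a real policy might route them to review instead.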

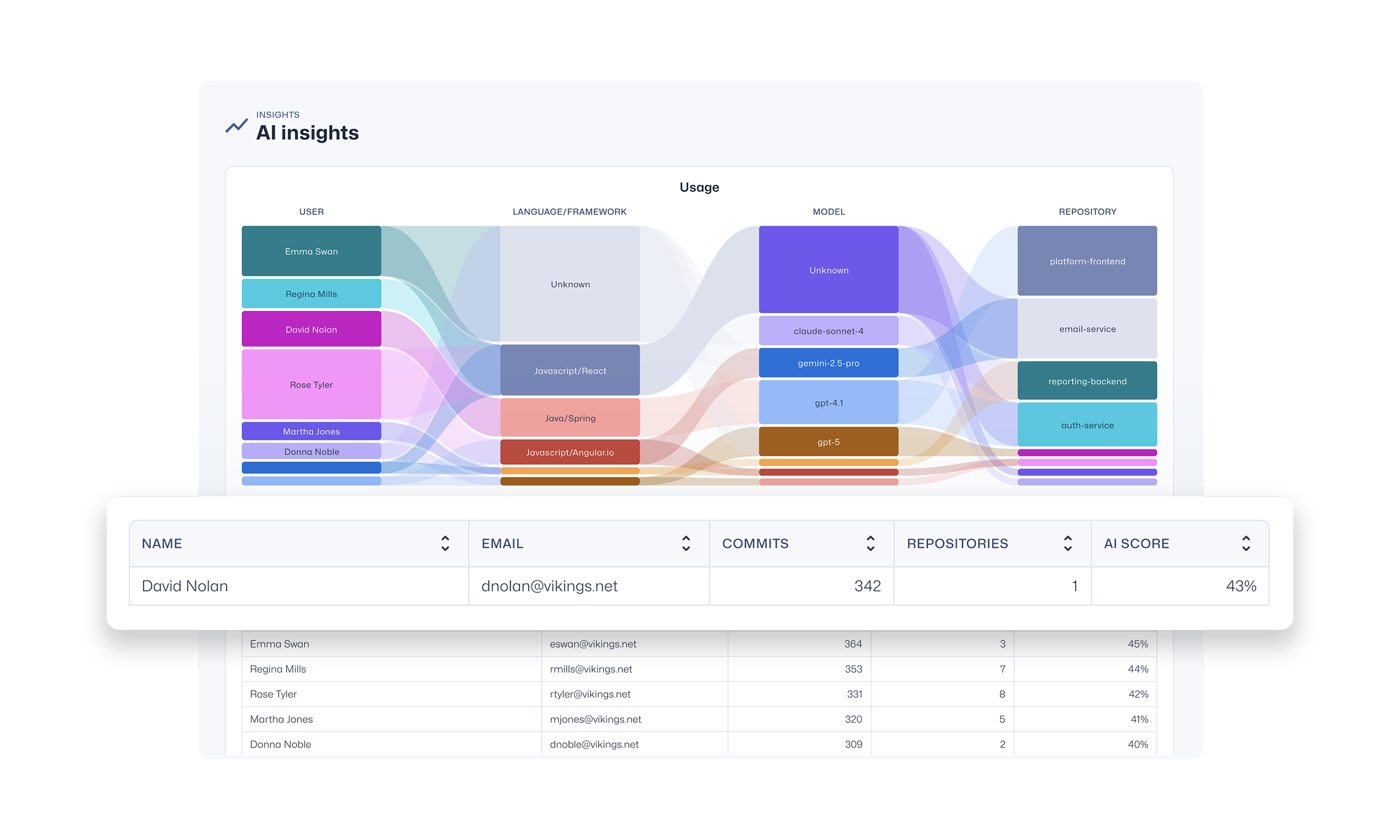

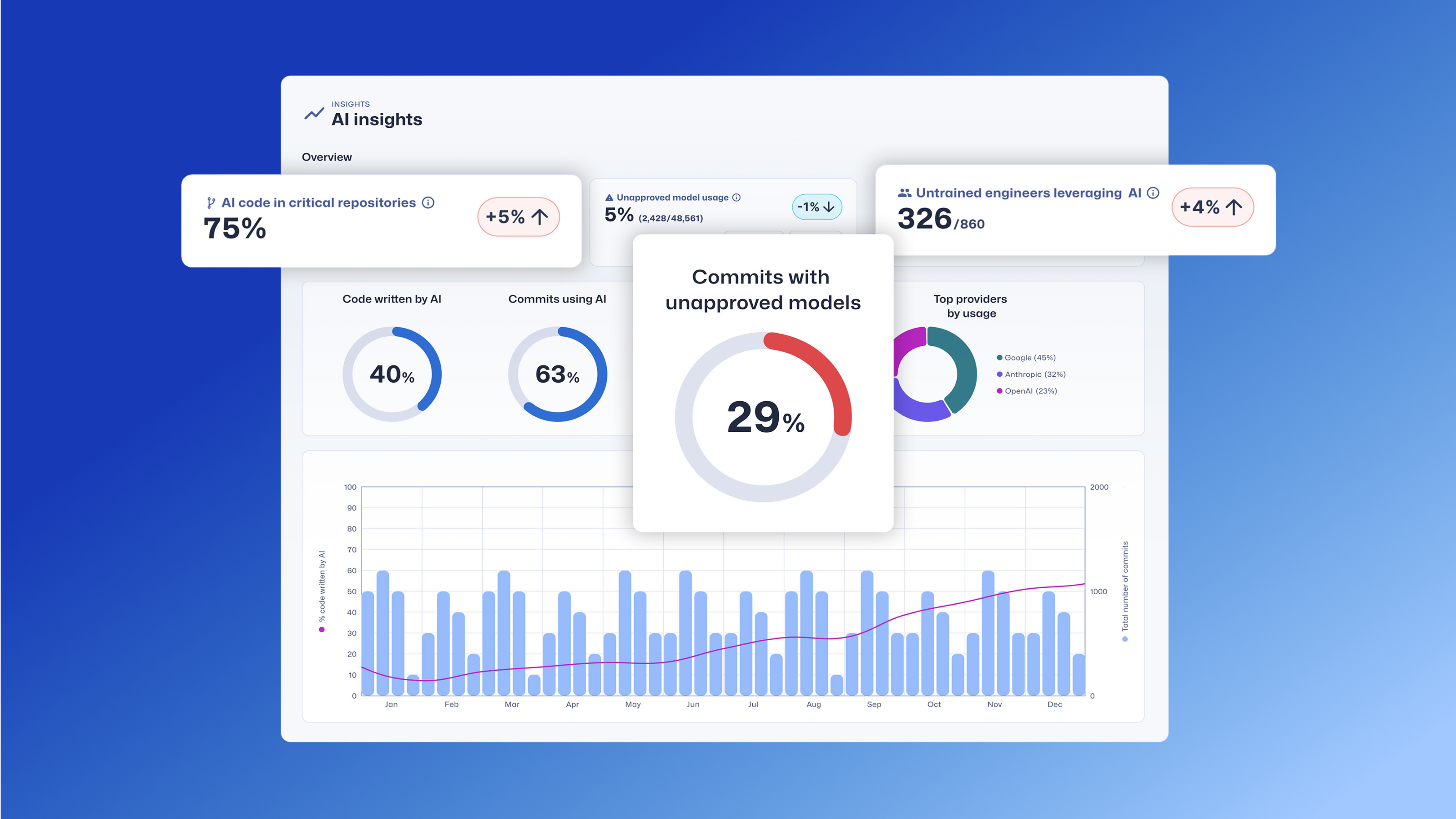

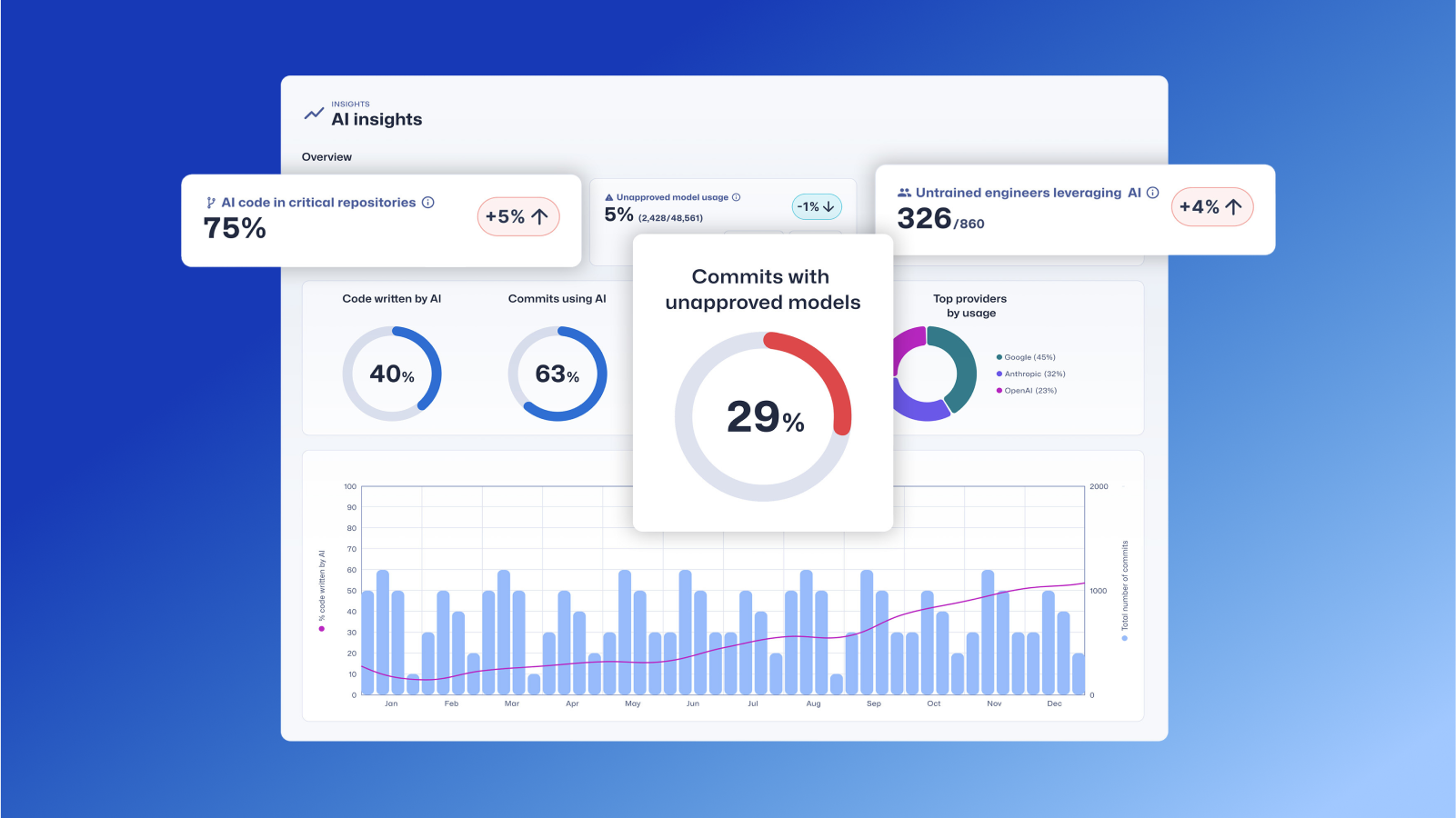

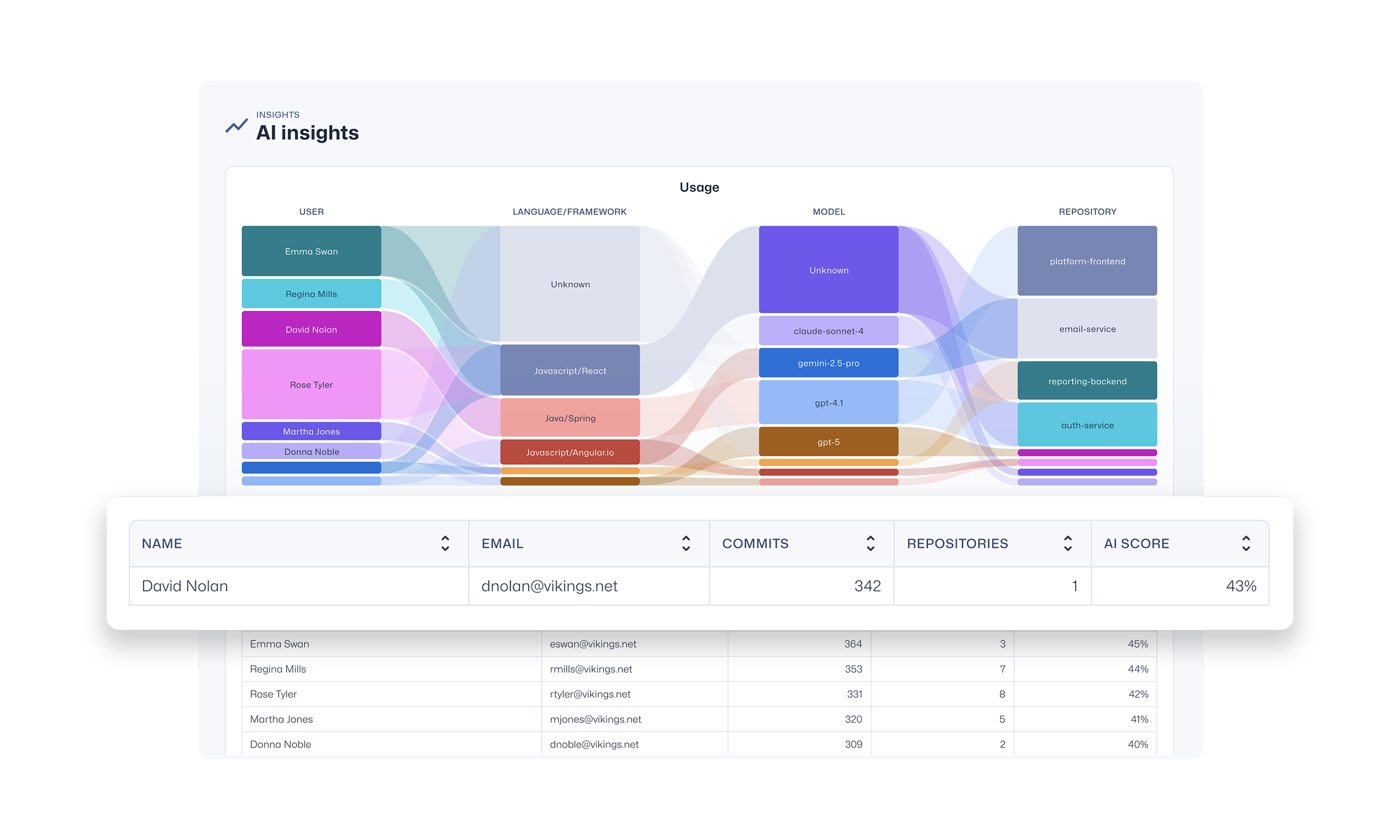

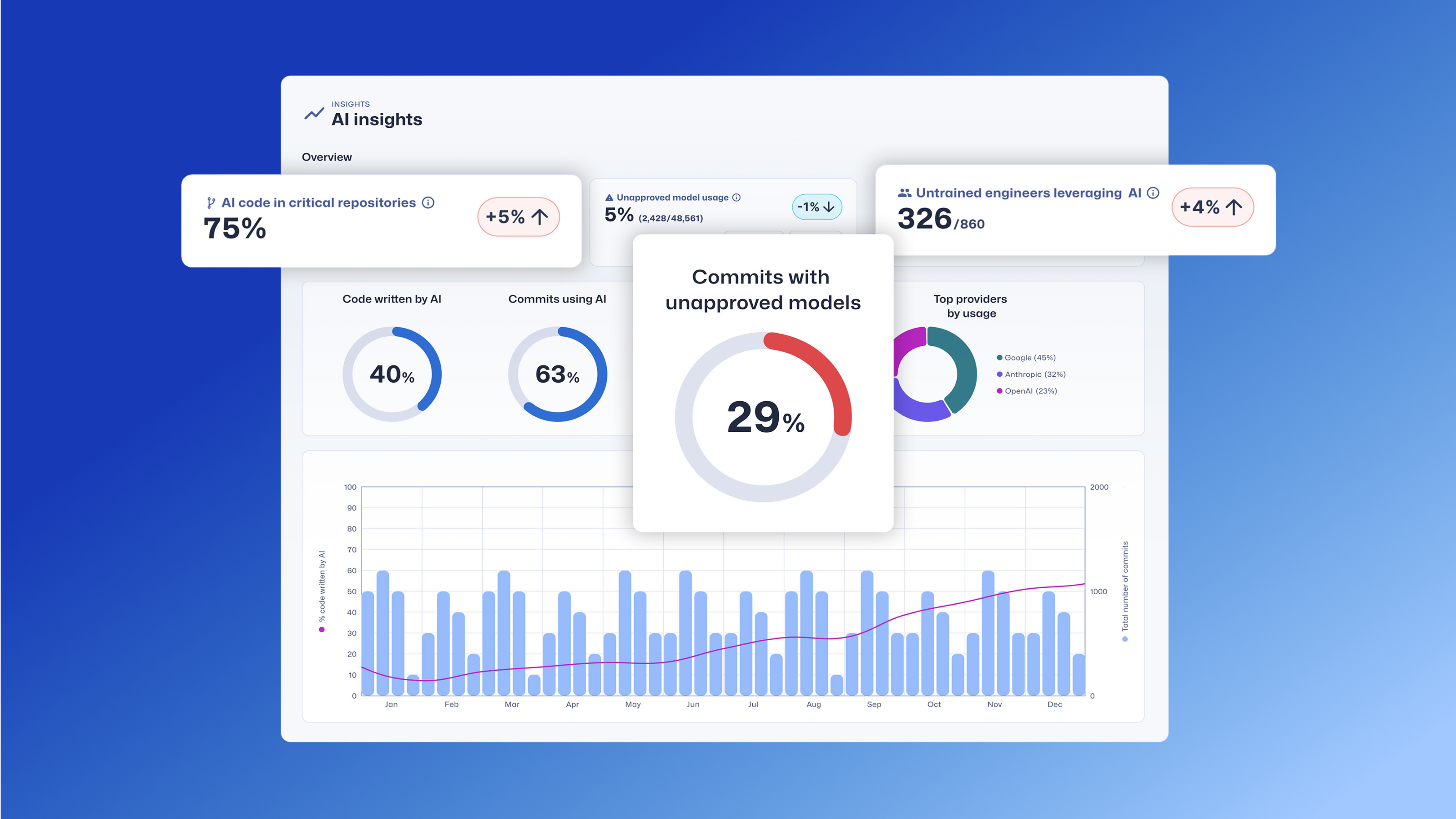

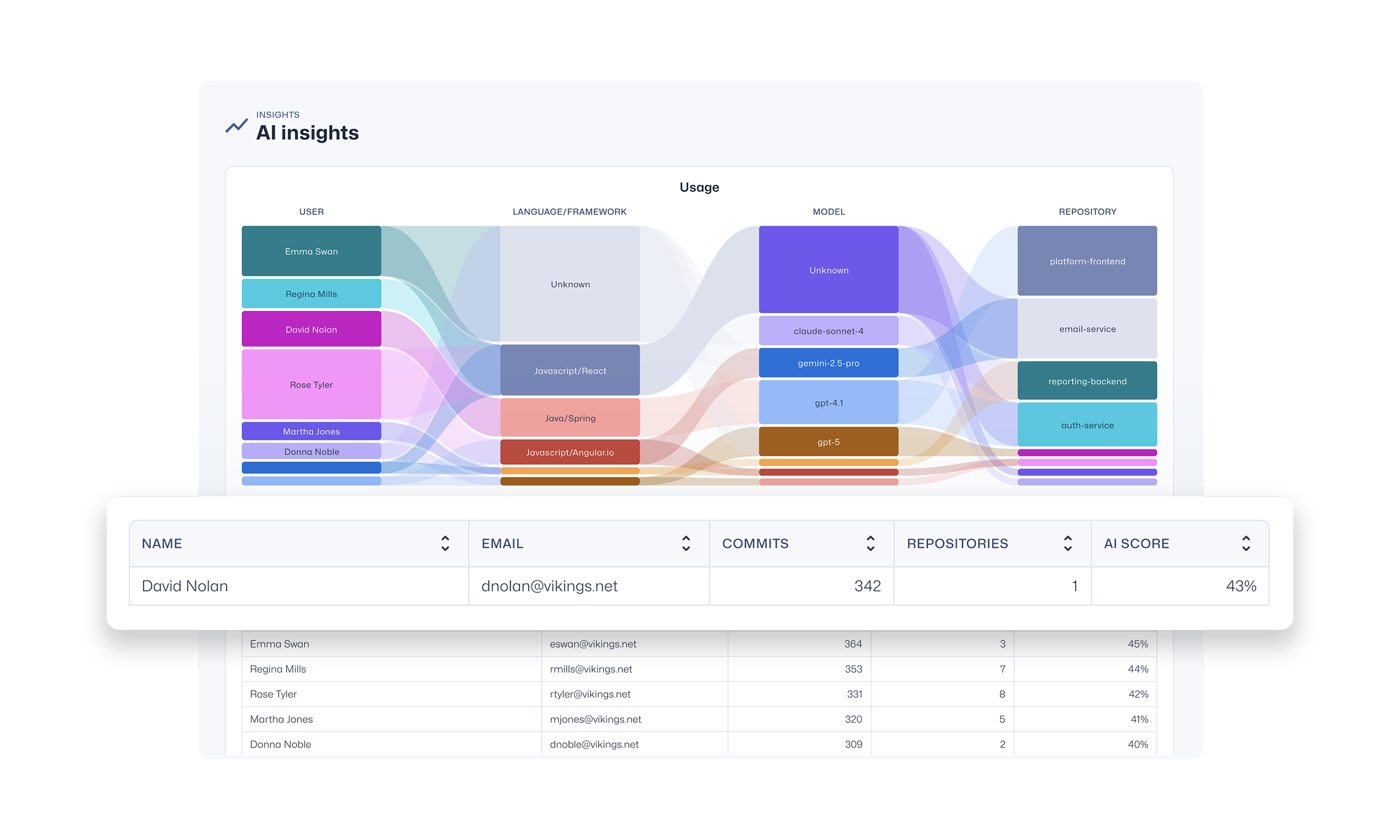

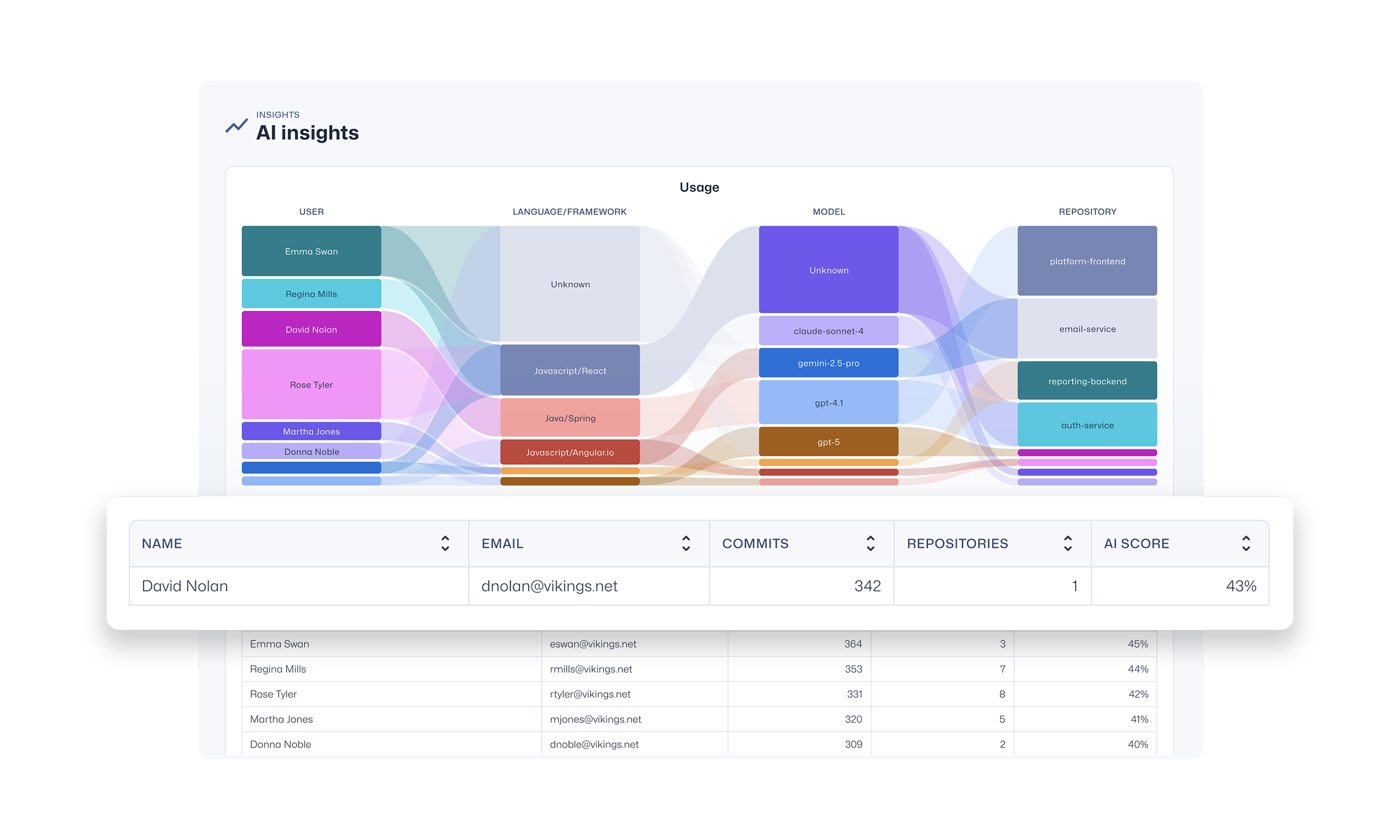

The Trust Agent: AI dashboard provides insights into AI coding tool usage, contributing developer, code repository, and more.

The Developer's Role: The Last Line of Defense

While Trust Agent: AI gives you unprecedented governance, we know that the developer remains the last, and most critical, line of defense. The most effective way to manage AI-generated code risk is to ensure your developers have the security skills to review, validate, and secure that code. This is where SCW Learning, part of our comprehensive Developer Risk Management platform, comes in. With SCW Learning, we equip your developers with the hands-on skills they need to safely leverage AI for productivity. The SCW Learning product includes:

- SCW Trust Score: An industry-first benchmark that quantifies developer security proficiency, enabling you to identify which developers are best equipped to handle AI-generated code.

- AI Challenges: Interactive, real-world coding challenges that specifically teach developers how to find and fix vulnerabilities in AI-generated code.

- Targeted Learning: Curated learning paths from 200+ AI Challenges, Guidelines, Walkthroughs, Missions, Quests, and Courses that reinforce secure coding principles and help developers master the security skills needed to mitigate AI risks.

By combining the powerful governance of Trust Agent: AI with the skill development of our learning product, you can create a truly secure SDLC. You’ll be able to identify risk, enforce policy, and empower your developers to build code faster and more securely than ever before.

Ready to Secure Your AI Journey?

The era of AI is here, and as a product manager, my goal is to build solutions that don't just solve today's problems but anticipate tomorrow's. Trust Agent: AI is designed to do just that, giving you the visibility and control to manage AI risks while empowering your teams to innovate. The early access beta is now live, and we'd love for you to be a part of it. This isn't just about a new product; it's about pioneering a new standard for secure software development in an AI-first world.

Join the Early Access Waitlist today!

Learn how Trust Agent: AI provides deep visibility and governance over AI-generated code, empowering organizations to innovate faster and more securely.

Secure Code Warrior is here for your organization to help you secure code across the entire software development lifecycle and create a culture in which cybersecurity is top of mind. Whether you’re an AppSec Manager, Developer, CISO, or anyone involved in security, we can help your organization reduce risks associated with insecure code.

Tamim Noorzad, a Director of Product Management at Secure Code Warrior, is an engineer turned product manager with over 17 years of experience, specializing in SaaS 0-to-1 products.

Resources to get you started

Trust Agent: AI by Secure Code Warrior

This one-pager introduces SCW Trust Agent: AI, a new set of capabilities that provide deep observability and governance over AI coding tools. Learn how our solution uniquely correlates AI tool usage with developer skills to help you manage risk, optimize your SDLC, and ensure every line of AI-generated code is secure.

Vibe Coding: Practical Guide to Updating Your AppSec Strategy for AI

Watch on-demand to learn how to empower AppSec managers to become AI enablers, rather than blockers, through a practical, training-first approach. We'll show you how to leverage Secure Code Warrior (SCW) to strategically update your AppSec strategy for the age of AI coding assistants.
