Coders Conquer Security OWASP Top 10 API Series - Lack of Resources and Rate Limiting

The lack of resources and rate limiting API vulnerability acts almost exactly as its title describes. Every API has limited resources and computing power available to it, depending on its environment. Most are also required to field requests from users or other programs asking them to perform their intended function. This vulnerability occurs when too many requests come in at the same time and the API does not have enough computing resources to handle them. The API can then become unavailable or unresponsive to new requests.

APIs become vulnerable to this problem if their rate or resource limits are not set correctly, or if limits are left undefined in the code. An API can then be overloaded if, for example, a business experiences a particularly busy period. But it's also a security vulnerability, because threat actors can purposely overload unprotected APIs with requests in order to perform Denial of Service (DoS) attacks, including distributed (DDoS) attacks launched from many machines at once.

Now, let's go a little deeper.

What are some examples of the lack of resources and rate limiting API vulnerability?

There are two ways that this vulnerability can sneak into an API. The first is when a coder simply doesn't define what the throttle rates should be for an API. There might be a default setting for throttle rates somewhere in the infrastructure, but relying on that is not a good policy. Instead, each API should have its rates set individually. This is especially true because APIs can have vastly different functions as well as available resources.

For example, an internal API designed to serve just a few users could have a very low throttle rate and work just fine. But a public-facing API that is part of a live eCommerce site would most likely need an exceptionally high rate defined to compensate for the possibility of a surge in simultaneous users. In both cases, the throttling rates should be defined based on the expected needs, the number of potential users, and the available computing power.

The second is setting the rates to unlimited. It might be tempting to do this in order to maximize performance, especially with APIs that will most likely be very busy. It could be accomplished with a simple bit of configuration (as an example, we'll use the Django REST framework for Python):

    "DEFAULT_THROTTLE_RATES": {
        "anon": None,
        "user": None,
    }

In that example, both anonymous users and those known to the system can contact the API an unlimited number of times, without regard to the number of requests over time. This is a bad idea because no matter how many computing resources an API has available, attackers can deploy things like botnets to eventually slow it to a crawl or possibly knock it offline altogether. When that happens, valid users will be denied access and the attack will be successful.
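One way to catch this kind of configuration before it ships is a small check over the rates mapping. The sketch below is only an illustration: `unthrottled_scopes` is a hypothetical helper, not part of the Django REST framework, and it assumes the rates are available as a plain dictionary shaped like the setting above.

```python
from typing import List, Mapping, Optional


def unthrottled_scopes(rates: Mapping[str, Optional[str]]) -> List[str]:
    """Return the scopes whose rate is missing (None or empty), sorted by name."""
    return sorted(scope for scope, rate in rates.items() if not rate)


# Mirrors the unlimited configuration shown above.
risky_rates = {"anon": None, "user": None}
# Example values for a configuration with limits in place.
safe_rates = {"anon": "200/hour", "user": "5000/hour"}

print(unthrottled_scopes(risky_rates))  # ['anon', 'user']
print(unthrottled_scopes(safe_rates))   # []
```

A check like this could run in a unit test or at startup, so that an unlimited rate is a deliberate decision rather than an oversight.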

Eliminating Lack of Resources and Rate Limiting Problems

Every API that is deployed by an organization should have its throttle rates defined in its code, along with its other resource limits. These could include things like execution timeouts, maximum allowable memory, the number of records per page that can be returned to a user, or the number of processes permitted within a defined timeframe.
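As an illustration of one of those limits, the number of records per page, here is a minimal sketch in plain Python. The names `DEFAULT_PAGE_SIZE`, `MAX_PAGE_SIZE`, and `clamp_page_size` are hypothetical, and the values are placeholders that would need tuning for a real API.

```python
DEFAULT_PAGE_SIZE = 25   # hypothetical default when the client sends nothing usable
MAX_PAGE_SIZE = 100      # hypothetical hard cap on records per page


def clamp_page_size(requested, default=DEFAULT_PAGE_SIZE, maximum=MAX_PAGE_SIZE):
    """Return a safe page size for a client-supplied value."""
    try:
        size = int(requested)
    except (TypeError, ValueError, OverflowError):
        # Missing or non-numeric input falls back to the default.
        return default
    if size < 1:
        return default
    # Never return more records than the server is prepared to serve.
    return min(size, maximum)


print(clamp_page_size("10"))       # 10
print(clamp_page_size("1000000"))  # 100
print(clamp_page_size(None))       # 25
```

The point of the cap is that a single request cannot ask the server for an arbitrarily large result set, whatever the client sends.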

Returning to the Django REST framework example, instead of leaving the throttling rates wide open, they could be tightly defined, with different rates for anonymous and known users.

    "DEFAULT_THROTTLE_RATES": {
        "anon": config("THROTTLE_ANON", default="200/hour"),
        "user": config("THROTTLE_USER", default="5000/hour"),
    }

In the new example, the API would limit anonymous users to making 200 requests per hour. Known users who are already vetted by the system are given more leeway at 5,000 requests per hour. But even they are limited to prevent an accidental overload at peak times or to compensate if a user account is compromised and used for a denial of service attack.
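To make the effect of a rate such as 200/hour concrete, here is a minimal sliding-window sketch in plain Python. It is not the Django REST framework's implementation; `parse_rate` and `SlidingWindowThrottle` are hypothetical names, the history is kept in process memory only, and the client key (an IP address from a documentation range) is just an example.

```python
import time
from collections import defaultdict, deque

PERIOD_SECONDS = {"second": 1, "minute": 60, "hour": 3600, "day": 86400}


def parse_rate(rate):
    """Turn a string such as "200/hour" into (max_requests, window_seconds)."""
    count, period = rate.split("/")
    return int(count), PERIOD_SECONDS[period]


class SlidingWindowThrottle:
    """Allow at most max_requests per client key within a moving time window."""

    def __init__(self, rate, clock=time.monotonic):
        self.max_requests, self.window = parse_rate(rate)
        self.clock = clock  # injectable so the behavior can be tested
        self.history = defaultdict(deque)  # client key -> request timestamps

    def allow(self, key):
        now = self.clock()
        stamps = self.history[key]
        # Drop timestamps that have aged out of the window.
        while stamps and stamps[0] <= now - self.window:
            stamps.popleft()
        if len(stamps) >= self.max_requests:
            return False
        stamps.append(now)
        return True


anon = SlidingWindowThrottle("200/hour")
results = [anon.allow("203.0.113.7") for _ in range(201)]
print(results.count(True), results[-1])  # 200 False
```

Because each key has its own history, one noisy client is rejected without affecting others. A production deployment would normally keep this state in a shared cache rather than in one process.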

As a final good practice, display a notification to users when they have reached the throttling limits, along with an explanation of when those limits will be reset. That way, valid users will know why an application is rejecting their requests. It also helps when valid users doing approved tasks are denied access to an API, because it can signal to operations personnel that the throttling limits need to be raised.
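For an HTTP API, that notification is commonly a 429 Too Many Requests response with a Retry-After header. The sketch below builds such a response as plain data. `throttled_response` is a hypothetical helper, and the wording of the message is only an example.

```python
import json
import math


def throttled_response(wait_seconds):
    """Build (status, headers, body) for a request rejected by the throttle."""
    # Round up so clients never retry before the limit has actually reset.
    retry_after = max(1, math.ceil(wait_seconds))
    headers = {
        "Content-Type": "application/json",
        "Retry-After": str(retry_after),
    }
    body = json.dumps({
        "detail": f"Request limit reached. Try again in {retry_after} seconds.",
    })
    return 429, headers, body


status, headers, body = throttled_response(12.3)
print(status, headers["Retry-After"])  # 429 13
```

Logging each of these responses with the client identity gives operations staff the signal described above when legitimate users are being throttled.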

Check out the Secure Code Warrior blog pages for more insight about this vulnerability and how to protect your organization and customers from the ravages of other security flaws. You can also try a demo of the Secure Code Warrior training platform to keep all your cybersecurity skills honed and up-to-date.

Matias Madou, Ph.D. is a security expert, researcher, and CTO and co-founder of Secure Code Warrior. Matias obtained his Ph.D. in Application Security from Ghent University, focusing on static analysis solutions. He later joined Fortify in the US, where he realized that it was insufficient to solely detect code problems without aiding developers in writing secure code. This inspired him to develop products that assist developers, alleviate the burden of security, and exceed customers' expectations. When he is not at his desk as part of Team Awesome, he enjoys being on stage presenting at conferences including RSA Conference, BlackHat and DefCon.

Secure Code Warrior is here for your organization to help you secure code across the entire software development lifecycle and create a culture in which cybersecurity is top of mind. Whether you’re an AppSec Manager, Developer, CISO, or anyone involved in security, we can help your organization reduce risks associated with insecure code.

Matias is a researcher and developer with more than 15 years of hands-on software security experience. He has developed solutions for companies such as Fortify Software and his own company Sensei Security. Over his career, Matias has led multiple application security research projects which have led to commercial products and boasts over 10 patents under his belt. When he is away from his desk, Matias has served as an instructor for advanced application security training courses and regularly speaks at global conferences including RSA Conference, Black Hat, DefCon, BSIMM, OWASP AppSec and BruCon.

Matias holds a Ph.D. in Computer Engineering from Ghent University, where he studied application security through program obfuscation to hide the inner workings of an application.

Table of contents

Matias Madou, Ph.D. is a security expert, researcher, and CTO and co-founder of Secure Code Warrior. Matias obtained his Ph.D. in Application Security from Ghent University, focusing on static analysis solutions. He later joined Fortify in the US, where he realized that it was insufficient to solely detect code problems without aiding developers in writing secure code. This inspired him to develop products that assist developers, alleviate the burden of security, and exceed customers' expectations. When he is not at his desk as part of Team Awesome, he enjoys being on stage presenting at conferences including RSA Conference, BlackHat and DefCon.

Secure Code Warrior is here for your organization to help you secure code across the entire software development lifecycle and create a culture in which cybersecurity is top of mind. Whether you’re an AppSec Manager, Developer, CISO, or anyone involved in security, we can help your organization reduce risks associated with insecure code.

Book a demoDownloadResources to get you started

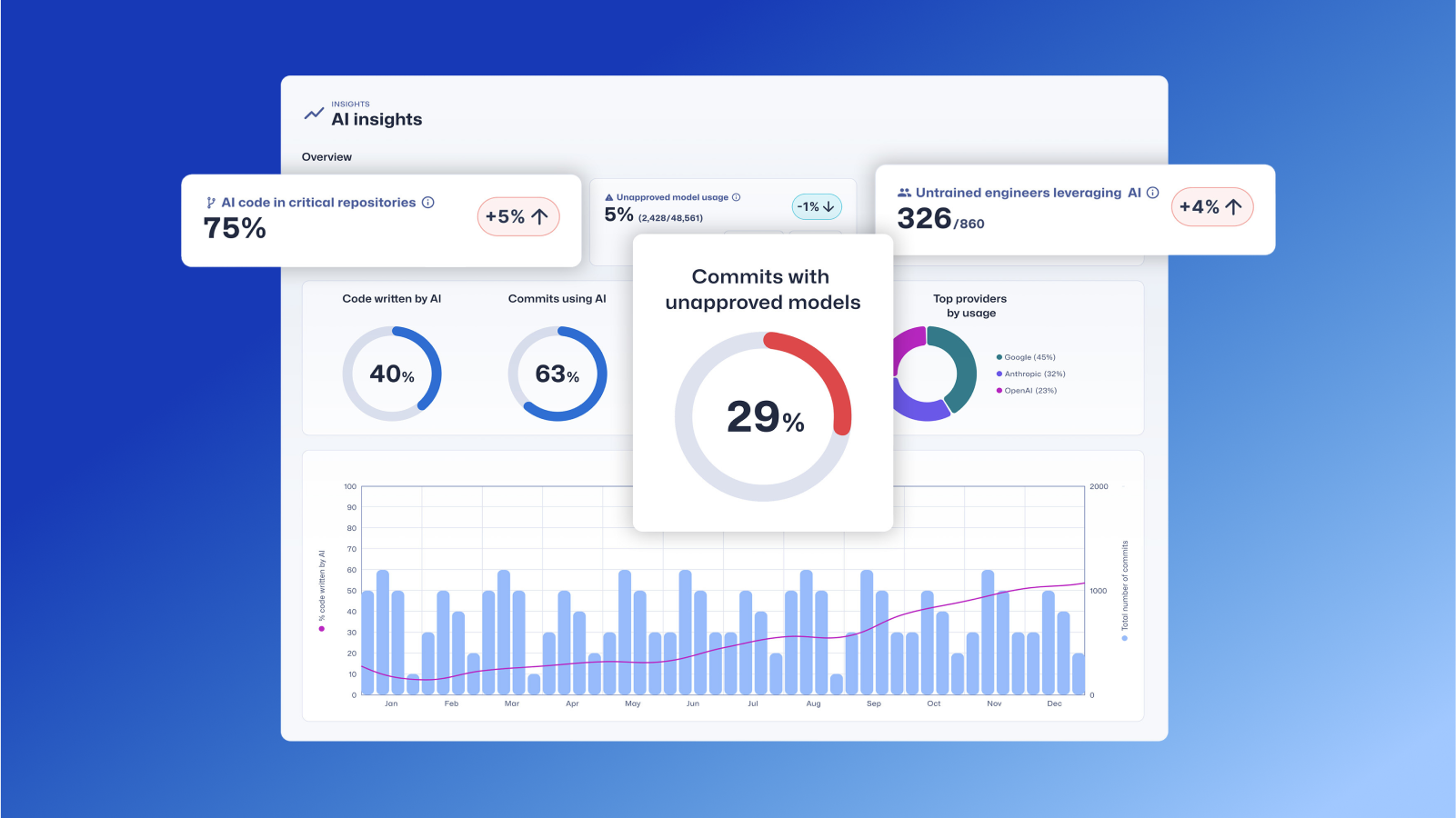

Trust Agent: AI by Secure Code Warrior

This one-pager introduces SCW Trust Agent: AI, a new set of capabilities that provide deep observability and governance over AI coding tools. Learn how our solution uniquely correlates AI tool usage with developer skills to help you manage risk, optimize your SDLC, and ensure every line of AI-generated code is secure.

Vibe Coding: Practical Guide to Updating Your AppSec Strategy for AI

Watch on-demand to learn how to empower AppSec managers to become AI enablers, rather than blockers, through a practical, training-first approach. We'll show you how to leverage Secure Code Warrior (SCW) to strategically update your AppSec strategy for the age of AI coding assistants.

.png)

.avif)

.avif)